Dragonfly Capital Partner: The Trust Problem and Verification Challenges in Decentralized Inference

Author: Haseeb Qureshi, Partner at Dragonfly Capital. Source: Medium. Translation: 善欧巴, 比特币买卖交易网

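Suppose you want to run a large language model like Llama2-70B. A model this large requires more than 140GB of memory (70 billion parameters at 2 bytes each in 16-bit precision come to about 140GB for the weights alone), which means you can't run the raw model on your home computer. What are your options? You might turn to a cloud provider, but you may not be keen on trusting a single centralized company to handle this workload for you and collect all your usage data. What you need is decentralized inference, which lets you run ML models without relying on any single provider.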

The Trust Problem

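In a decentralized network, it's not enough to simply run a model and trust the output. Suppose I ask the network to analyze a governance dilemma using Llama2-70B. How do I know it isn't actually using Llama2-13B, giving me a worse analysis, and pocketing the difference?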

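In the centralized world, you might trust that a company like OpenAI is doing this honestly because its reputation is at stake (and, to some degree, LLM quality is self-evident). But in the decentralized world, honesty is not assumed; it is verified.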

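This is where verifiable inference comes into play. In addition to providing a response to a query, you also prove that it ran correctly on the model you asked for. But how?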

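The most naive approach would be to run the model as a smart contract on-chain. This would certainly guarantee that the output is verified, but it is wildly impractical. GPT-3 represents words with an embedding dimension of 12,288. If you were to do a single matrix multiplication of that size on-chain, it would cost about $10 billion at current gas prices, and the computation would fill every block for about a month straight.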
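For a sense of scale, the sketch below only counts multiply-accumulate (MAC) operations. It assumes that "a single matrix multiplication of that size" means multiplying two 12,288 × 12,288 matrices (the text doesn't specify the shapes). It does not try to reproduce the dollar figure, which depends on gas-price assumptions not given here. The helper name `matmul_mac_count` is hypothetical.

```python
def matmul_mac_count(m: int, k: int, n: int) -> int:
    """Multiply-accumulate operations for an (m x k) @ (k x n) product."""
    return m * k * n

D = 12_288  # GPT-3 embedding dimension cited in the text

# Assumed reading: a square D x D matrix times another D x D matrix.
square = matmul_mac_count(D, D, D)
# Smaller case for comparison: one D x D weight matrix times one vector.
mat_vec = matmul_mac_count(D, D, 1)

print(f"{square:,}")   # 1,855,425,871,872
print(f"{mat_vec:,}")  # 150,994,944
```

Even the smaller matrix-vector case is about 151 million MACs. In a straightforward EVM implementation, each MAC would need at least a separate multiply opcode and add opcode.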

So, no. We need a different approach.

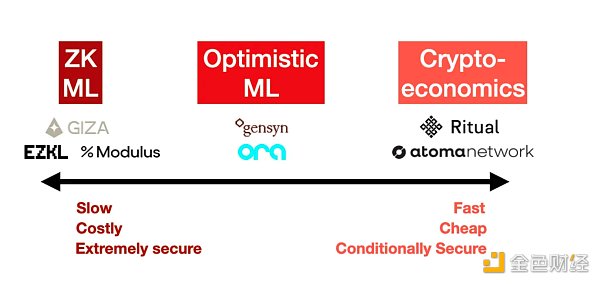

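After surveying the landscape, it's clear to me that three main approaches have emerged to tackle verifiable inference: zero-knowledge proofs, optimistic fraud proofs, and cryptoeconomics. Each comes with its own security and cost implications.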

1. Zero-Knowledge Proofs (ZK ML)

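Imagine being able to prove that you ran a massive model, with a proof that stays effectively fixed-size no matter how large the model is. That's what ZK ML promises, through the magic of ZK-SNARKs.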

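While it sounds elegant in principle, compiling a deep neural network into zero-knowledge circuits that can then be proven is extremely difficult. It's also very expensive. At a minimum, you're likely looking at 1000x the cost of inference and 1000x the latency (the time to generate the proof). That is before counting the work of compiling the model itself into a circuit, which has to happen before any of this. Ultimately that cost has to be passed on to users, so this will end up being very expensive for end users.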

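On the other hand, this is the only approach that guarantees correctness cryptographically. With ZK, the model provider can't cheat no matter how hard they try. But the costs are so large that this is impractical for large models for the foreseeable future.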

Examples: EZKL, Modulus Labs, Giza

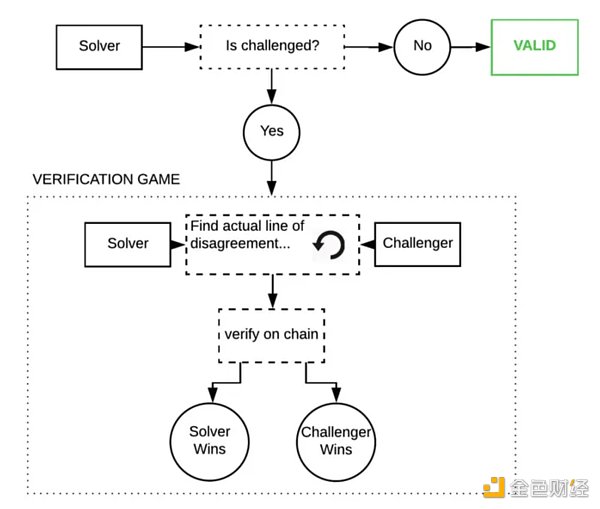

2. Optimistic Fraud Proofs (Optimistic ML)

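The optimistic approach is to trust, but verify. We assume the inference is correct unless proven otherwise. If a node tries to cheat, "watchers" in the network can call out the cheater and challenge them with a fraud proof. These watchers have to watch the chain at all times. They re-run the inferences on their own models to make sure the outputs are correct.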

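These fraud proofs are Truebit-style interactive challenge-response games. You repeatedly bisect the model's execution trace on-chain until you find the error.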
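The bisection idea can be sketched in a few lines. This is a toy model, not Truebit's actual protocol. It makes three assumptions:

- Each party has committed to a hash of the model's state after every step. Here those hashes are just strings.
- Both traces are available locally. In a real game, the parties reveal midpoints round by round.
- The referee only needs to re-execute the single step where the traces first diverge.

The function name `find_first_divergence` is hypothetical. The point is that an N-step trace is narrowed down in about log2(N) rounds.

```python
from typing import Sequence

def find_first_divergence(claimed: Sequence[str],
                          honest: Sequence[str]) -> tuple[int, int]:
    """Bisect two traces of per-step state commitments.

    Precondition: the traces agree at index 0 (same input) and disagree at
    the last index (different final output). Returns (step, rounds), where
    the traces agree at `step` but disagree at `step + 1`.
    """
    assert len(claimed) == len(honest) >= 2
    assert claimed[0] == honest[0] and claimed[-1] != honest[-1]
    lo, hi = 0, len(claimed) - 1  # invariant: agree at lo, disagree at hi
    rounds = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        rounds += 1
        if claimed[mid] == honest[mid]:
            lo = mid  # error lies after mid
        else:
            hi = mid  # error lies at or before mid
    return lo, rounds

# 1,024 steps (1,025 states); the cheater's trace goes wrong after step 700.
honest = [f"s{i}" for i in range(1025)]
claimed = honest[:701] + [f"bad{i}" for i in range(701, 1025)]
step, rounds = find_first_divergence(claimed, honest)
print(step, rounds)  # 700 10
```

Only the transition from state 700 to state 701 would then need to be executed on-chain, instead of the whole trace.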

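If this ever actually happens, it's incredibly costly, since these programs are huge and have enormous internal state: a single GPT-3 inference costs about 1 petaflop (10^15 floating-point operations). But game theory suggests this should almost never happen. (That said, fraud proofs are notoriously hard to code correctly, precisely because that code almost never gets exercised in production.)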

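The upside of the optimistic approach is that ML is secure as long as there is a single honest watcher paying attention. The cost is lower than ZK ML, but remember that every watcher in the network re-runs every query on its own. At equilibrium, this security cost must be passed on to the user. With 10 watchers, users will have to pay more than 10x the inference cost (or more than however many watchers there are).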

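The downside, as with optimistic rollups, is that you have to wait for the challenge period to pass before you can be sure the response has been verified. Depending on how the network is parameterized, though, you might be waiting minutes rather than days.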
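A minimal sketch of the challenge-period logic follows. It assumes a hypothetical `OptimisticResult` record with a wall-clock window in seconds. A real network would more likely measure the window in blocks and send disputes into the bisection game rather than just setting a flag.

```python
from dataclasses import dataclass

@dataclass
class OptimisticResult:
    """Toy model of an optimistic inference result awaiting finality."""
    output: str
    submitted_at: float      # seconds; when the result was posted
    challenge_window: float  # seconds during which watchers may dispute it
    disputed: bool = False

    def challenge(self, now: float) -> bool:
        """A watcher disputes the result; only accepted inside the window."""
        if now < self.submitted_at + self.challenge_window:
            self.disputed = True
            return True
        return False

    def is_final(self, now: float) -> bool:
        """Final only once the window has closed without any dispute."""
        return not self.disputed and now >= self.submitted_at + self.challenge_window

r = OptimisticResult("answer", submitted_at=0.0, challenge_window=300.0)  # hypothetical 5-minute window
print(r.is_final(120.0))  # False: still inside the window
print(r.is_final(300.0))  # True: window closed with no dispute

disputed = OptimisticResult("answer", submitted_at=0.0, challenge_window=300.0)
disputed.challenge(60.0)
print(disputed.is_final(1_000.0))  # False: a disputed result never finalizes here
```

The user-facing latency is the `challenge_window` parameter. That is why parameterization decides whether the wait is minutes or days.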

Examples: Ora, Gensyn (although currently underspecified)

3. Cryptoeconomics (Cryptoeconomic ML)

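Here we drop all the fancy techniques and do the simple thing: stake-weighted voting. A user decides how many nodes should run their query. Each node reveals its response, and if the responses disagree, the odd one out gets slashed. It's standard oracle stuff. This more straightforward approach lets users set their desired security level, balancing cost and trust. If Chainlink were doing ML, this is how they'd do it.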

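Latency here is fast: you just need a commit-reveal from each node. If this is being written to a blockchain, then technically it can happen in two blocks.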
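Below is a toy version of stake-weighted commit-reveal voting. Everything here is an assumption for illustration:

- The function names are hypothetical.
- The commitment is SHA-256 over a random salt plus the response.
- Exact string equality defines "the same response".
- Slashing hits every node that disagrees with the stake-weighted winner or fails to reveal.

It is not Chainlink's or any other network's actual protocol.

```python
import hashlib
import secrets
from collections import defaultdict

def commit(response: str, salt: bytes) -> str:
    """Commit phase (block 1): a node publishes only a salted hash."""
    return hashlib.sha256(salt + response.encode()).hexdigest()

def settle(commits: dict[str, str],
           reveals: dict[str, tuple[str, bytes]],
           stakes: dict[str, int]) -> tuple[str, set[str]]:
    """Reveal phase (block 2): verify reveals against commitments, pick the
    response with the most stake behind it, and list the nodes to slash.
    Raises ValueError if no node produced a valid reveal."""
    weight: dict[str, int] = defaultdict(int)
    valid: dict[str, str] = {}
    for node, (response, salt) in reveals.items():
        if commit(response, salt) == commits.get(node):
            valid[node] = response
            weight[response] += stakes[node]
    winner = max(weight, key=weight.get)  # on ties, first response seen wins
    # Slash dissenters, bad reveals, and nodes that committed but never revealed.
    slashed = {node for node in commits if valid.get(node) != winner}
    return winner, slashed

stakes = {"a": 100, "b": 100, "c": 50}
salts = {node: secrets.token_bytes(16) for node in stakes}
answers = {"a": "yes", "b": "yes", "c": "no"}
commits = {node: commit(answers[node], salts[node]) for node in stakes}
reveals = {node: (answers[node], salts[node]) for node in stakes}
winner, slashed = settle(commits, reveals, stakes)
print(winner, slashed)  # yes {'c'}
```

Exact equality is the fragile part of this sketch. As the section on nondeterminism explains, two honest nodes can produce slightly different outputs.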

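Security, however, is the weakest of the three. A majority of nodes could rationally choose to collude if they were wily enough. As a user, you have to reason about how much these nodes have at stake and what it would cost them to cheat. That said, with something like EigenLayer restaking and attributable security, the network could effectively provide insurance in case of a security failure.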

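But the nice part of this system is that the user can specify how much security they want. They can choose to have 3 nodes or 5 nodes in their quorum, or every node in the network. If they want to YOLO, they could even choose n=1. The cost function here is simple: the user pays for however many nodes they want in their quorum. If you choose 3, you pay 3x the inference cost.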
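The three cost models can be put side by side with a few one-line functions. This is a deliberate simplification. It uses only the rough multipliers stated in the text:

- at least 1000x for ZK;
- one re-run per watcher on top of the original inference;
- one run per quorum member.

It ignores latency, staked capital, proving hardware, and the n=1 obfuscation idea. The function names are hypothetical.

```python
def zk_cost(base: float, overhead: float = 1000.0) -> float:
    """ZK ML: the text suggests at least ~1000x the cost of plain inference."""
    return base * overhead

def optimistic_cost(base: float, watchers: int) -> float:
    """Optimistic ML: the original inference plus one re-run per watcher."""
    return base * (1 + watchers)

def cryptoeconomic_cost(base: float, quorum: int) -> float:
    """Cryptoeconomic ML: the user pays for every node in the quorum."""
    return base * quorum

base = 1.0  # cost of one plain inference, in arbitrary units
print(zk_cost(base))                  # 1000.0
print(optimistic_cost(base, 10))      # 11.0, i.e. "more than 10x" with 10 watchers
print(cryptoeconomic_cost(base, 3))   # 3.0
```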

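Here's the tricky question: can you make n=1 secure? In a naive implementation, a lone node should cheat every time if nobody is checking. But I suspect that if you encrypt the queries and do the payments through intents, you might be able to hide from a node the fact that it's the only one responding to the task. In that case, you might be able to charge the average user less than 2x the inference cost.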

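Ultimately, the cryptoeconomic approach is the simplest, the easiest, and probably the cheapest. But it's the least sexy and, in principle, the least secure. As always, the devil is in the details.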

Examples: Ritual (although currently underspecified), Atoma Network

Why Verifiable ML Is Hard

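You might wonder why we don't have all this already. After all, at bottom, machine learning models are just very large computer programs. Proving that programs were executed correctly has long been the bread and butter of blockchains.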

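This is why these three verification approaches mirror the ways blockchains secure their blockspace. ZK rollups use ZK proofs, optimistic rollups use fraud proofs, and most L1 blockchains use cryptoeconomics. It's no surprise that we arrived at essentially the same solutions. So what makes this hard when applied to ML?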

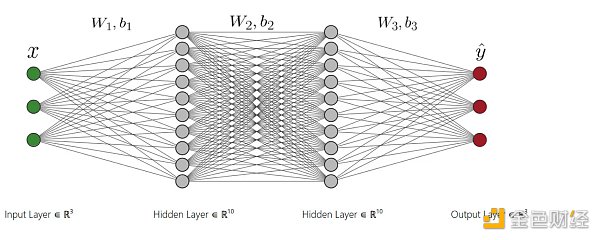

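ML is unique because ML computations are generally represented as dense computation graphs designed to run efficiently on GPUs. They are not designed to be proven. So if you want to prove ML computations in a ZK or optimistic setting, they have to be recompiled into a format that makes this possible, which is very complex and expensive.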

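The second fundamental difficulty with ML is nondeterminism. Program verification assumes that a program's outputs are deterministic. But if you run the same model on different GPU architectures or CUDA versions, you can get different outputs. Even if you force every node to use the same architecture, you still have the problem of randomness used in the algorithms (the noise in diffusion models, or token sampling in LLMs). You can fix that randomness by controlling the RNG seed. Even then, one final, menacing problem remains: the nondeterminism inherent in floating-point operations.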
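A minimal sketch shows how fixing the seed removes the sampling randomness. It assumes a toy next-token distribution and Python's `random.Random`; `sample_token` is a hypothetical helper. Identical draws are only guaranteed on the same Python version. Seeding also does nothing about the floating-point issue.

```python
import random

def sample_token(probs: dict[str, float], seed: int) -> str:
    """Sample one token from a next-token distribution with a fixed seed."""
    rng = random.Random(seed)  # private generator: same seed, same draw
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

next_token = {"yes": 0.5, "no": 0.3, "maybe": 0.2}

# Two "nodes" that agree on the seed make the same random choice.
node_a = [sample_token(next_token, seed=s) for s in range(10)]
node_b = [sample_token(next_token, seed=s) for s in range(10)]
print(node_a == node_b)  # True
```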

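Almost all operations on GPUs are done on floating-point numbers. Floating point is finicky because it is not associative: (a + b) + c is not always the same as a + (b + c). Because GPUs are highly parallelized, the order of additions or multiplications can differ from run to run, which can cause small differences in the output. Given the discrete nature of words, this is unlikely to affect an LLM's output. For an image model, though, it can produce slightly different pixel values, so two images fail to match exactly.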
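Non-associativity is easy to reproduce on any CPU with ordinary IEEE 754 doubles; no GPU is needed. The sketch below sums the same three numbers in every order. This stands in, as an assumption, for a parallel reduction whose order changes between runs. `sequential_sum` is a hypothetical helper.

```python
from itertools import permutations

def sequential_sum(values) -> float:
    """Left-to-right floating-point sum: one fixed accumulation order."""
    total = 0.0
    for v in values:
        total += v
    return total

left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left, right, left == right)  # 0.6000000000000001 0.6 False

# The same three numbers, summed in every possible order.
results = {sequential_sum(p) for p in permutations([0.1, 0.2, 0.3])}
print(len(results) > 1)  # True: the order alone changes the result
```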

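This means you either need to avoid floating point, which means an enormous performance hit, or you need to allow some slack when comparing outputs. Either way, the details are fiddly, and you can't fully abstract them away. (This, incidentally, is why the EVM doesn't support floating-point numbers, although some blockchains, such as NEAR, do.)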
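Both options can be sketched in a few lines, under these assumptions:

- a hypothetical fixed-point scale of 2^16;
- Python's unbounded integers (real hardware uses fixed-width integers that can overflow);
- hypothetical tolerances for `math.isclose`.

Choosing a tolerance is itself a security decision, because it defines how far an output may drift before it counts as wrong.

```python
import math

SCALE = 2**16  # hypothetical fixed-point scale (16 fractional bits)

def to_fixed(x: float) -> int:
    """Convert a float to a fixed-point integer once, up front."""
    return round(x * SCALE)

def fixed_sum(values: list[float]) -> int:
    """Option 1: integer addition is associative, so every summation order
    gives the same result (Python ints are unbounded, unlike GPU integers)."""
    return sum(to_fixed(v) for v in values)

def outputs_match(a: list[float], b: list[float],
                  rel_tol: float = 1e-6, abs_tol: float = 1e-6) -> bool:
    """Option 2: keep floats, but accept small element-wise differences."""
    return len(a) == len(b) and all(
        math.isclose(x, y, rel_tol=rel_tol, abs_tol=abs_tol) for x, y in zip(a, b)
    )

vals = [0.1, 0.2, 0.3]
print(fixed_sum(vals) == fixed_sum(list(reversed(vals))))       # True
print(outputs_match([(0.1 + 0.2) + 0.3], [0.1 + (0.2 + 0.3)]))  # True
```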

In short, decentralized inference networks are hard because all the details matter, and reality has a surprising number of details.

Conclusion

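Right now, blockchains and ML clearly have a lot in common. One is a technology that creates trust; the other is a technology in sore need of it. Each approach to decentralized inference has its own tradeoffs. I'm very interested to see how entrepreneurs use these tools to build the best networks.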
